Quantum state tomography¶

One can open this notebook in Google Colab (is recommended)

In this tutorial, we perform quantum state tomography via Riemannian optimization. First two blocks of a code (1. Many-qubit, informationally complete, positive operator-valued measure (IC POVM) and 2. Data set generation (measurement outcomes simulation)) are refered to data generation, third bock dedicated to tomography of a state.

First, one needs to import all necessary libraries.

[1]:

import tensorflow as tf # tf 2.x

from math import sqrt

try:

import QGOpt as qgo

except ImportError:

!pip install git+https://github.com/LuchnikovI/QGOpt

import QGOpt as qgo

import matplotlib.pyplot as plt

from tqdm import tqdm

# Fix random seed to make results reproducable.

tf.random.set_seed(42)

1. Many-qubit, informationally complete, positive operator-valued measure (IC POVM)¶

Before generating measurement outcomes and performing quantum tomography, one needs to introduce POVM describing quantum measurements. For simplicity, we use one-qubit tetrahedral POVM and generalize it on a many-qubit case by taking tensor product between POVM elements, i.e. \(\{M_\alpha\}_{\alpha=1}^4\) is the one-qubit tetrahedral POVM, \(\{M_{\alpha_1}\otimes \dots \otimes M_{\alpha_N}\}_{\alpha_1=1,\dots,\alpha_N=1}^4\) is the many-qubits tetrahedral POVM.

[2]:

# Auxiliary function that returns Kronecker product between two

# POVM elements A and B

def kron(A, B):

"""Kronecker product of two POVM elements.

Args:

A: complex valued tensor of shape (q, n, k).

B: complex valued tensor of shape (p, m, l).

Returns:

complex valued tensor of shape (q * p, n * m, k * l)"""

AB = tf.tensordot(A, B, axes=0)

AB = tf.transpose(AB, (0, 3, 1, 4, 2, 5))

shape = AB.shape

AB = tf.reshape(AB, (shape[0] * shape[1],

shape[2] * shape[3],

shape[4] * shape[5]))

return AB

# Pauli matrices

sigma_x = tf.constant([[0, 1], [1, 0]], dtype=tf.complex128)

sigma_y = tf.constant([[0 + 0j, -1j], [1j, 0 + 0j]], dtype=tf.complex128)

sigma_z = tf.constant([[1, 0], [0, -1]], dtype=tf.complex128)

# All Pauli matrices in one tensor of shape (3, 2, 2)

sigma = tf.concat([sigma_x[tf.newaxis],

sigma_y[tf.newaxis],

sigma_z[tf.newaxis]], axis=0)

# Coordinates of thetrahedron peaks (is needed to build tetrahedral POVM)

s0 = tf.constant([0, 0, 1], dtype=tf.complex128)

s1 = tf.constant([2 * sqrt(2) / 3, 0, -1/3], dtype=tf.complex128)

s2 = tf.constant([-sqrt(2) / 3, sqrt(2 / 3), -1 / 3], dtype=tf.complex128)

s3 = tf.constant([-sqrt(2) / 3, -sqrt(2 / 3), -1 / 3], dtype=tf.complex128)

# Coordinates of thetrahedron peaks in one tensor of shape (4, 3)

s = tf.concat([s0[tf.newaxis],

s1[tf.newaxis],

s2[tf.newaxis],

s3[tf.newaxis]], axis=0)

# One qubit thetrahedral POVM

M = 0.25 * (tf.eye(2, dtype=tf.complex128) + tf.tensordot(s, sigma, axes=1))

n = 2 # number of qubits we experiment with

# M for n qubits (Mmq)

Mmq = M

for _ in range(n - 1):

Mmq = kron(Mmq, M)

2. Data set generation (measurement outcomes simulation).¶

Here we generate a set of measurement outcomes (training set). First of all, we generate a random density matrix that is a target state we want to reconstruct. Then, we simulate measurement outcomes over the target state driven by many-qubits tetrahedral POVM introduced in the previous cell.

[3]:

#-----------------------------------------------------#

num_of_meas = 600000 # number of measurement outcomes

#-----------------------------------------------------#

# random target density matrix (to be reconstructed)

m = qgo.manifolds.DensityMatrix()

A = m.random((2 ** n, 2 ** n), dtype=tf.complex128)

rho_true = A @ tf.linalg.adjoint(A)

# measurements simulation (by using Gumbel trick for sampling from a

# discrete distribution)

P = tf.cast(tf.tensordot(Mmq, rho_true, [[1, 2], [1, 0]]), dtype=tf.float64)

eps = tf.random.uniform((num_of_meas, 2 ** (2 * n)), dtype=tf.float64)

eps = -tf.math.log(-tf.math.log(eps))

ind_set = tf.math.argmax(eps + tf.math.log(P), axis=-1)

# POVM elements came true (data set)

data_set = tf.gather_nd(Mmq, ind_set[:, tf.newaxis])

3. Data processing (tomography)¶

First, we define an example of the density matrices manifold:

[4]:

m = qgo.manifolds.DensityMatrix()

The manifold of density matrices is represneted through the quadratic parametrization \(\varrho = AA^\dagger\) with an equivalence relation \(A\sim AQ\), where \(Q\) is an arbitrary unitary matrix. Thus, we initialize a variable, that represents the parametrization of a density matrix:

[5]:

# random initial paramterization

a = m.random((2 ** n, 2 ** n), dtype=tf.complex128)

# in order to make an optimizer works properly

# one need to turn a to real representation

a = qgo.manifolds.complex_to_real(a)

# variable

a = tf.Variable(a)

Then we initialize Riemannian Adam optimizer:

[6]:

lr = 0.07 # optimization step size

opt = qgo.optimizers.RAdam(m, lr)

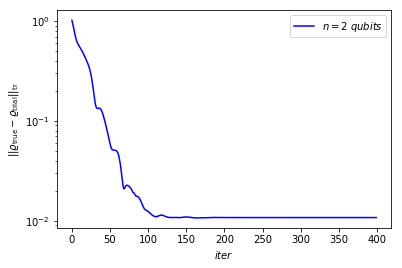

Finally, we ran part of code that calculate forward pass, gradients, and optimization step several times until convergence is reached:

[7]:

# the list will be filled by value of trace distance per iteration

trace_distance = []

for _ in range(400):

with tf.GradientTape() as tape:

# complex representation of parametrization

# shape=(2**n, 2**n)

ac = qgo.manifolds.real_to_complex(a)

# density matrix

rho_trial = ac @ tf.linalg.adjoint(ac)

# probabilities of measurement outcomes

p = tf.tensordot(rho_trial, data_set, [[0, 1], [2, 1]])

p = tf.math.real(p)

# negative log likelihood (to be minimized)

L = -tf.reduce_mean(tf.math.log(p))

# filling trace_distance list (for further plotting)

trace_distance.append(tf.reduce_sum(tf.math.abs(tf.linalg.eigvalsh(rho_trial - rho_true))))

# gradient

grad = tape.gradient(L, a)

# optimization step

opt.apply_gradients(zip([grad], [a]))

Here we plot trace distance vs number of iteration to validate the result

[8]:

plt.plot(trace_distance, 'b')

plt.legend([r'$n=$' + str(n) + r'$\ qubits$'])

plt.yscale('log')

plt.ylabel(r'$||\varrho_{\rm true} - \varrho_{\rm trial}||_{\rm tr}$')

plt.xlabel(r'$iter$')

[8]:

Text(0.5,0,'$iter$')